Radar, electromagnetic sensor used for detecting, locating, tracking, and recognizing objects of various kinds at considerable distances. It operates by transmitting electromagnetic energy toward objects, commonly referred to as targets, and observing the echoes returned from them. The targets may be aircraft, ships, spacecraft, automotive vehicles, and astronomical bodies, or even birds, insects, and rain. Besides determining the presence, location, and velocity of such objects, radar can sometimes obtain their size and shape as well. What distinguishes radar from optical and infrared sensing devices is its ability to detect faraway objects under adverse weather conditions and to determine their range, or distance, with precision.

History of radar

Early experiments

Serious developmental work on radar began in the 1930s, but the basic idea of radar had its origins in the classical experiments on electromagnetic radiation conducted by German physicist Heinrich Hertz during the late 1880s. Hertz set out to verify experimentally the earlier theoretical work of Scottish physicist James Clerk Maxwell. Maxwell had formulated the general equations of the electromagnetic field, determining that both light and radio waves are examples of electromagnetic waves governed by the same fundamental laws but having widely different frequencies. Maxwell’s work led to the conclusion that radio waves can be reflected from metallic objects and refracted by a dielectric medium, just as light waves can. Hertz demonstrated these properties in 1888, using radio waves at a wavelength of 66 cm (which corresponds to a frequency of about 455 MHz).

First military radars

During the 1930s, efforts to use radio echoes for aircraft detection were initiated independently and almost simultaneously in eight countries that were concerned with the prevailing military situation and that already had practical experience with radio technology. The United States, Great Britain, Germany, France, the Soviet Union, Italy, the Netherlands, and Japan all began experimenting with radar within about two years of one another and embarked, with varying degrees of motivation and success, on its development for military purposes. Several of these countries had some form of operational radar equipment in military service at the start of World War II.

The first observation of the radar effect at the U.S. Naval Research Laboratory (NRL) in Washington, D.C., was made in 1922. NRL researchers positioned a radio transmitter on one shore of the Potomac River and a receiver on the other. A ship sailing on the river unexpectedly caused fluctuations in the intensity of the received signals when it passed between the transmitter and receiver. (Today such a configuration would be called bistatic radar.) In spite of the promising results of this experiment, U.S. Navy officials were unwilling to sponsor further work.

The principle of radar was “rediscovered” at NRL in 1930 when L.A. Hyland observed that an aircraft flying through the beam of a transmitting antenna caused a fluctuation in the received signal. Although Hyland and his associates at NRL were enthusiastic about the prospect of detecting targets by radio means and were eager to pursue its development in earnest, little interest was shown by higher authorities in the navy. Not until it was learned how to use a single antenna for both transmitting and receiving (now termed monostatic radar) was the value of radar for detecting and tracking aircraft and ships fully recognized. Such a system was demonstrated at sea on the battleship USS New York in early 1939.

The first radars developed by the U.S. Army were the SCR-268 (at a frequency of 205 MHz) for controlling antiaircraft gunfire and the SCR-270 (at a frequency of 100 MHz) for detecting aircraft. Both of these radars were available at the start of World War II, as was the navy’s CXAM shipboard surveillance radar (at a frequency of 200 MHz). It was an SCR-270, one of six available in Hawaii at the time, that detected the approach of Japanese warplanes toward Pearl Harbor, near Honolulu, on December 7, 1941; however, the significance of the radar observations was not appreciated until bombs began to fall.

Britain commenced radar research for aircraft detection in 1935. The British government encouraged engineers to proceed rapidly because it was quite concerned about the growing possibility of war. By September 1938 the first British radar system, the Chain Home, had gone into 24-hour operation, and it remained operational throughout the war. The Chain Home radars allowed Britain to deploy its limited air defenses successfully against the heavy German air attacks conducted during the early part of the war. They operated at about 30 MHz (in what is called the shortwave, or HF, band), which is actually quite a low frequency for radar. It might not have been the optimum solution, but the inventor of British radar, Sir Robert Watson-Watt, believed that something that worked and was available was better than an ideal solution that was only a promise or might arrive too late.

The Soviet Union also started working on radar during the 1930s. At the time of the German attack on their country in June 1941, the Soviets had developed several different types of radars and had in production an aircraft-detection radar that operated at 75 MHz (in the very-high-frequency [VHF] band). Their development and manufacture of radar equipment was disrupted by the German invasion, and the work had to be relocated.

At the beginning of World War II, Germany had progressed farther in the development of radar than any other country. The Germans employed radar on the ground and in the air for defense against Allied bombers. Radar was installed on a German pocket battleship as early as 1936. Radar development was halted by the Germans in late 1940 because they believed the war was almost over. The United States and Britain, however, accelerated their efforts. By the time the Germans realized their mistake, it was too late to catch up.

Except for some German radars that operated at 375 and 560 MHz, all of the successful radar systems developed prior to the start of World War II were in the VHF band, below about 200 MHz. The use of VHF posed several problems. First, VHF beamwidths are broad. (Narrow beamwidths yield greater accuracy, better resolution, and the exclusion of unwanted echoes from the ground or other clutter.) Second, the VHF portion of the electromagnetic spectrum does not permit the wide bandwidths required for the short pulses that allow for greater accuracy in range determination. Third, VHF is subject to atmospheric noise, which limits receiver sensitivity. In spite of these drawbacks, VHF represented the frontier of radio technology in the 1930s, and radar development at this frequency range constituted a genuine pioneering accomplishment. It was well understood by the early developers of radar that operation at even higher frequencies was desirable, particularly since narrow beamwidths could be achieved without excessively large antennas.
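The first two drawbacks of VHF can be put in rough numbers. The sketch below assumes the common rule of thumb that an antenna of width D has a half-power beamwidth of roughly 70λ/D degrees (the constant depends on how the aperture is illuminated and is an assumption here, not a figure from the text), and that a pulse of bandwidth B gives a range resolution of about c/(2B). The function names, the 5 m antenna, and the 3 GHz comparison frequency are illustrative choices.

```python
C = 299_792_458.0  # speed of light, m/s


def beamwidth_deg(freq_hz, aperture_m, k=70.0):
    """Approximate half-power beamwidth in degrees (rule of thumb: k * lambda / D)."""
    wavelength_m = C / freq_hz
    return k * wavelength_m / aperture_m


def range_resolution_m(bandwidth_hz):
    """Approximate range resolution c / (2B) for a pulse of bandwidth B."""
    return C / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    # Same 5 m antenna at a VHF frequency and at a microwave frequency.
    for freq in (200e6, 3e9):
        print(f"{freq / 1e6:.0f} MHz: {beamwidth_deg(freq, 5.0):.1f} deg beamwidth")
    # Wider bandwidth (shorter pulse) gives finer range resolution.
    for bw in (1e6, 10e6):
        print(f"{bw / 1e6:.0f} MHz bandwidth: {range_resolution_m(bw):.0f} m")
```

Under these assumptions, a 5 m antenna gives a beam about 21 degrees wide at 200 MHz but only about 1.4 degrees wide at 3 GHz, which is why higher frequencies allow narrow beams from antennas of manageable size.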

Fundamentals of radar

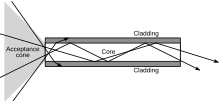

Radar typically involves the radiating of a narrow beam of electromagnetic energy into space from an antenna (see the figure). The narrow antenna beam scans a region where targets are expected. When a target is illuminated by the beam, it intercepts some of the radiated energy and reflects a portion back toward the radar system. Since most radar systems do not transmit and receive at the same time, a single antenna is often used on a time-shared basis for both transmitting and receiving.

A receiver attached to the output element of the antenna extracts the desired reflected signals and (ideally) rejects those that are of no interest. For example, a signal of interest might be the echo from an aircraft. Signals that are not of interest might be echoes from the ground or rain, which can mask and interfere with the detection of the desired echo from the aircraft. The radar measures the location of the target in range and angular direction. Range, or distance, is determined by measuring the total time it takes for the radar signal to make the round trip to the target and back (see below). The angular direction of a target is found from the direction in which the antenna points at the time the echo signal is received. Through measurement of the location of a target at successive instants of time, the target’s recent track can be determined. Once this information has been established, the target’s future path can be predicted. In many surveillance radar applications, the target is not considered to be “detected” until its track has been established.
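The range and track calculations described above can be sketched in a few lines. Range follows from the round-trip delay as R = ct/2, since the signal covers the distance twice. The track prediction shown is a plain constant-velocity extrapolation from two position fixes, an assumption chosen for simplicity; practical trackers smooth many measurements with a filter. The function names are illustrative.

```python
C = 299_792_458.0  # speed of light, m/s


def range_from_delay(round_trip_s):
    """Target range in metres from the round-trip echo delay in seconds."""
    return C * round_trip_s / 2.0


def predict_position(p0, t0, p1, t1, t_future):
    """Extrapolate an (x, y) position linearly from two timed fixes."""
    dt = t1 - t0
    vx = (p1[0] - p0[0]) / dt  # velocity estimate along x
    vy = (p1[1] - p0[1]) / dt  # velocity estimate along y
    lead = t_future - t1       # how far ahead of the last fix to predict
    return (p1[0] + vx * lead, p1[1] + vy * lead)


if __name__ == "__main__":
    print(f"{range_from_delay(1e-3) / 1000:.1f} km")
    print(predict_position((0.0, 0.0), 0.0, (100.0, 50.0), 1.0, 3.0))
```

An echo delay of one millisecond corresponds to a range of about 150 km.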

Radar imaging

Radar can distinguish one kind of target from another (such as a bird from an aircraft), and some systems are able to recognize specific classes of targets (for example, a commercial airliner as opposed to a military jet fighter). Target recognition is accomplished by measuring the size and speed of the target and by observing the target with high resolution in one or more dimensions. Propellers and jet engines modify the radar echo from aircraft and can assist in target recognition. The flapping of the wings of a bird in flight produces a characteristic modulation that can be used to recognize that a bird is present or even to distinguish one type of bird from another.

Cross-range resolution obtained from Doppler frequency, along with range resolution, is the basis for synthetic aperture radar (SAR). SAR produces an image of a scene that is similar, but not identical, to an optical photograph. One should not expect the image seen by radar “eyes” to be the same as that observed by optical eyes. Each provides different information. Radar and optical images differ because of the large difference in the frequencies involved; optical frequencies are approximately 100,000 times higher than radar frequencies.

SAR can operate from long range and through clouds or other atmospheric effects that limit optical and infrared imaging sensors. The resolution of a SAR image can be made independent of range, an advantage over passive optical imaging where the resolution worsens with increasing range. Synthetic aperture radars that map areas of the Earth’s surface with resolutions of a few metres can provide information about the nature of the terrain and what is on the surface.
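The range independence of SAR resolution can be illustrated with two textbook approximations, used here as assumptions rather than as figures from the text: a conventional (real) aperture of length D resolves about Rλ/D in cross range at range R, while an idealized, fully focused stripmap SAR resolves about D/2 regardless of range. The function names and example values are illustrative.

```python
def real_aperture_cross_range_m(range_m, wavelength_m, antenna_m):
    """Cross-range resolution of a real aperture: grows linearly with range."""
    return range_m * wavelength_m / antenna_m


def stripmap_sar_cross_range_m(antenna_m):
    """Idealized focused stripmap SAR cross-range resolution: D / 2, no range term."""
    return antenna_m / 2.0


if __name__ == "__main__":
    wavelength_m = 0.03  # roughly a 10 GHz radar
    antenna_m = 2.0
    for range_m in (10e3, 100e3):
        real = real_aperture_cross_range_m(range_m, wavelength_m, antenna_m)
        sar = stripmap_sar_cross_range_m(antenna_m)
        print(f"{range_m / 1e3:.0f} km: real aperture {real:.0f} m, SAR {sar:.0f} m")
```

In this idealized model the real-aperture figure grows tenfold when the range grows tenfold, while the SAR figure does not change.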

A SAR operates on a moving vehicle, such as an aircraft or spacecraft, to image stationary objects or planetary surfaces. Since relative motion is the basis for the Doppler resolution, high resolution (in cross range) also can be accomplished if the radar is stationary and the target is moving. This is called inverse synthetic aperture radar (ISAR). Both the target and the radar can be in motion with ISAR.

A basic radar system

The figure shows the basic parts of a typical radar system. The transmitter generates the high-power signal that is radiated by the antenna. In a sense, an antenna acts as a “transducer” to couple electromagnetic energy from the transmission line to radiation in space, and vice versa. The duplexer permits alternate transmission and reception with the same antenna; in effect, it is a fast-acting switch that protects the sensitive receiver from the high power of the transmitter.

The receiver selects and amplifies radar echoes so that they can be displayed on a television-like screen for the human operator or be processed by a computer. The signal processor separates the signals reflected by possible targets from unwanted clutter. Then, on the basis of the echo’s exceeding a predetermined value, a human operator or a digital computer circuit decides whether a target is present.
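The detection decision can be sketched as a simple threshold test on the echo power in each range cell. The rule for setting the threshold (a fixed multiple of the average noise power) and the names `threshold_from_noise` and `detect` are assumptions made for illustration; the text says only that the echo must exceed a predetermined value.

```python
def threshold_from_noise(noise_samples, factor=10.0):
    """Hypothetical rule: threshold = factor * mean noise power."""
    mean_noise = sum(noise_samples) / len(noise_samples)
    return factor * mean_noise


def detect(echo_powers, threshold):
    """Return the indices of range cells whose echo power exceeds the threshold."""
    return [i for i, power in enumerate(echo_powers) if power > threshold]


if __name__ == "__main__":
    noise = [0.1, 0.1, 0.1, 0.1]
    echoes = [0.1, 0.2, 5.0, 0.3, 7.5]
    print(detect(echoes, threshold_from_noise(noise)))
```

A higher threshold reduces false alarms from noise and clutter at the cost of missing weaker targets, so the factor is a design trade-off.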

Once it has been decided that a target is present and its location (in range and angle) has been determined, the track of the target can be obtained by measuring the target location at different times. During the early days of radar, target tracking was performed by an operator marking the location of the target “blip” on the face of a cathode-ray tube (CRT) display with a grease pencil. Manual tracking has been largely replaced by automatic electronic tracking, which can process hundreds or even thousands of target tracks simultaneously.

The system control optimizes various parameters on the basis of environmental conditions and provides the timing and reference signals needed to permit the various parts of the radar to operate effectively as an integrated system. Further descriptions of the major parts of a radar system are given below.

Factors affecting radar performance

The performance of a radar system can be judged by the following: (1) the maximum range at which it can see a target of a specified size, (2) the accuracy of its measurement of target location in range and angle, (3) its ability to distinguish one target from another, (4) its ability to detect the desired target echo when masked by large clutter echoes, unintentional interfering signals from other “friendly” transmitters, or intentional radiation from hostile jamming (if a military radar), (5) its ability to recognize the type of target, and (6) its availability (ability to operate when needed), reliability, and maintainability. Some of the major factors that affect performance are discussed in this section.

Examples of radar systems

For many years radar has been used to provide information about the intensity and extent of rain and other forms of precipitation. This application of radar is well known in the United States from the familiar television weather reports of precipitation observed by the radars of the National Weather Service. A major improvement in the capability of weather radar came about when engineers developed new radars that could measure the Doppler frequency shift in addition to the magnitude of the echo signal reflected from precipitation. The Doppler frequency shift is important because it is related to the radial velocity of the precipitation blown by wind (the component of the wind moving either toward or away from the radar installation). Since tornadoes, mesocyclones (which spawn tornadoes), hurricanes, and other hazardous weather phenomena tend to rotate, measurement of the radial wind speed as a function of viewing angle will identify rotating weather patterns. (Rotation is indicated when the measurement of the Doppler frequency shift shows that the wind is coming toward the radar at one angle and away from it at a nearby angle.)
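The relation between Doppler shift and radial velocity, and the rotation signature described above, can be sketched as follows. The conversion uses the standard two-way Doppler relation v = f_d·c/(2f), with a positive shift taken to mean motion toward the radar. The rotation check is a deliberately simple assumption (adjacent viewing angles with opposite signs and a large velocity difference) and is not the algorithm any operational weather radar uses; the 20 m/s threshold is hypothetical.

```python
C = 299_792_458.0  # speed of light, m/s


def radial_velocity_mps(doppler_hz, carrier_hz):
    """Radial velocity from Doppler shift; positive means motion toward the radar."""
    return doppler_hz * C / (2.0 * carrier_hz)


def rotation_candidates(velocities, min_shear_mps=20.0):
    """Indices i where samples at adjacent angles i and i+1 have opposite
    signs and differ by at least min_shear_mps."""
    hits = []
    for i in range(len(velocities) - 1):
        a, b = velocities[i], velocities[i + 1]
        if a * b < 0 and abs(a - b) >= min_shear_mps:
            hits.append(i)
    return hits


if __name__ == "__main__":
    print(f"{radial_velocity_mps(1000.0, 3e9):.1f} m/s")
    # Radial velocities (m/s) at successive azimuth angles, same range.
    print(rotation_candidates([2.0, 3.0, 25.0, -22.0, -1.0]))
```

At a 3 GHz carrier, a Doppler shift of 1 kHz corresponds to a radial speed of about 50 m/s.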

The pulse Doppler weather radars employed by the National Weather Service, which are known as Nexrad, make quantitative measurements of precipitation, warn of potential flooding or dangerous hail, provide wind speed and direction, indicate the presence of wind shear and gust fronts, track storms, predict thunderstorms, and provide other meteorological information. In addition to measuring precipitation (from the intensity of the echo signal) and radial speed (from the Doppler frequency shift), Nexrad also measures the spread in radial speed (difference between the maximum and the minimum speeds) of the precipitation particles within each radar resolution cell. The spread in radial speed is an indication of wind turbulence.

Another improvement in the weather information provided by Nexrad is the digital processing of radar data, a procedure that renders the information in a form that can be interpreted by an observer who is not necessarily a meteorologist. The computer automatically identifies severe weather effects and indicates their nature on a display viewed by the observer. High-speed communication lines integrated with the Nexrad system allow timely weather information to be transmitted for display to various users.

The Nexrad radar operates at S-band frequencies (2.7 to 3 GHz) and is equipped with a 28-foot- (8.5-metre-) diameter antenna. It takes five minutes to scan its 1-degree beamwidth through 360 degrees in azimuth and from 0 to 20 degrees in elevation. The Nexrad system can measure rainfall up to a distance of 460 km and determine its radial velocity as far as 230 km.

A serious weather hazard to aircraft in the process of landing or taking off from an airport is the downburst, or microburst. This strong downdraft causes wind shear capable of forcing aircraft to the ground. Terminal Doppler weather radar (TDWR) is the name of the type of system at or near airports that is specially designed to detect dangerous microbursts. It is similar in principle to Nexrad but is a shorter-range system since it has to observe dangerous weather phenomena only in the vicinity of an airport. It operates from 5.60 to 5.65 GHz (C band) to avoid interference with the lower frequencies of Nexrad and ASR systems.

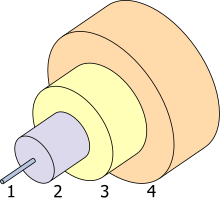

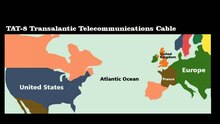

